xCOLIBRI-IoT

The COLIBRI toolbox for developing Case-based Explanation Systems for the IoT domain.

About xCOLIBRI-IoT

xCOLIBRI-IoT is an evolution of the COLIBRI platform focused on applying Case-based Reasoning (CBR) to the explanation of intelligent systems in the Internet of Things domain.

The goal of Explainable Artificial Intelligence (XAI) is "to create a suite of new or modified machine learning techniques that produce explainable models that, when combined with effective explanation techniques, enable end users to understand, appropriately trust, and effectively manage the emerging generation of Artificial Intelligence (AI) systems".

The Internet of Things (IoT) is one of the domains where such AI systems are being deployed. IoT appeared recently with the objective of exploiting the potential of connected devices. Moreover, these devices can complete intelligent tasks by integrating AI models. Like any other AI system, these AIoT (Artificial Intelligence of Things) systems must also include explanations to improve users' trust, especially when their results feed critical decisions.

The IoT is therefore tightly related to AI, as AI models are being applied to the data collected from IoT devices. Massive data generation and the improvement of communications and technologies are increasing the demand for AI models that make intelligent decisions in the different domains where IoT is applied. Intelligent IoT applications have the same explainability requirements as any standalone AI system. Therefore, including XAI in the IoT can improve these devices' utility if users can better understand how things are learning and acting. We coin the term XAIoT (eXplainable Artificial Intelligence of Things) to refer to eXplainable AI models applied to IoT solutions.

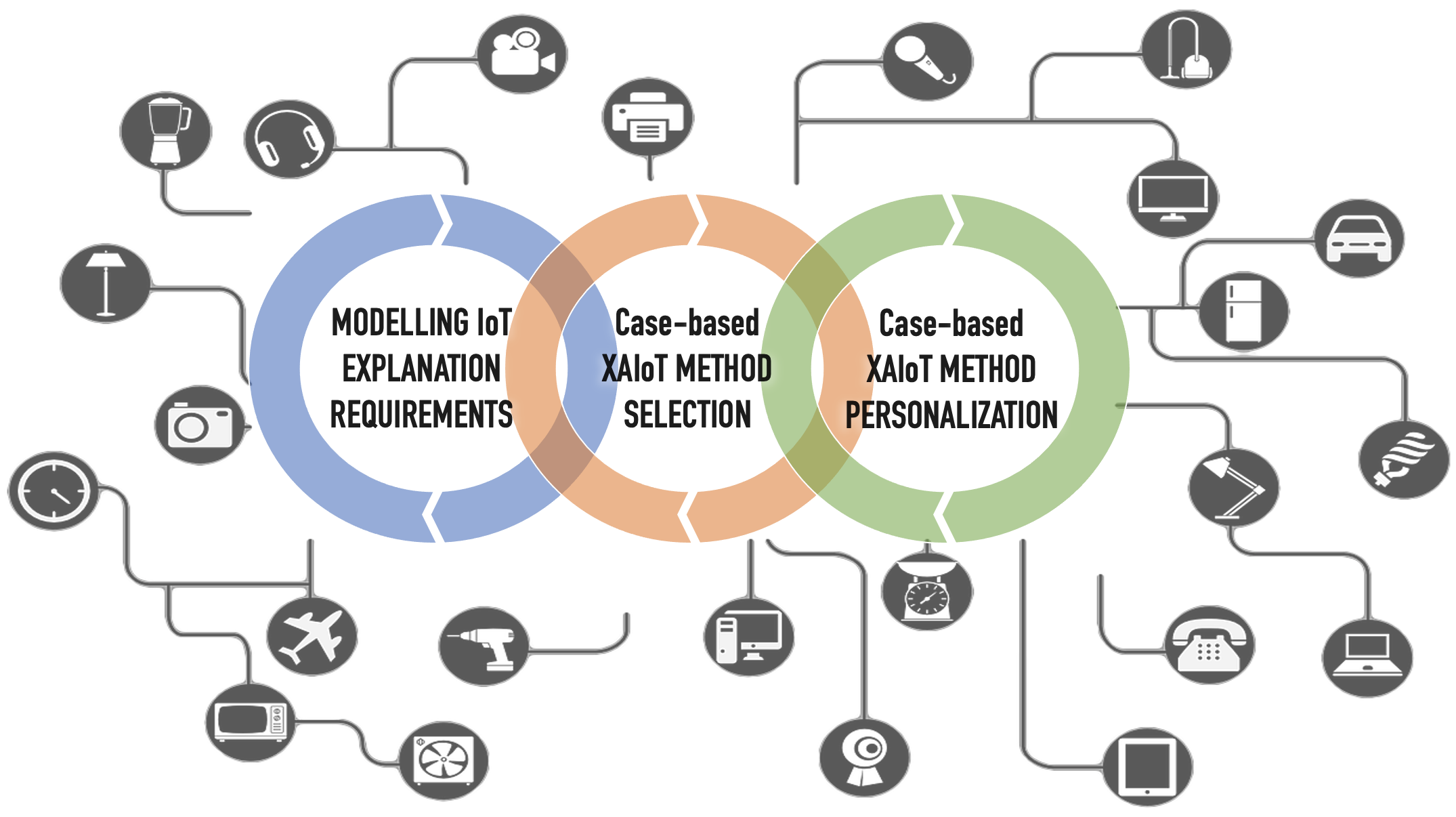

The COLIBRI toolbox for XAIoT is summarized by the figure below. It provides tools for: modelling explainability requirements in the IoT domain, selecting the most suitable XAI approach for the given XAIoT requirements, and personalizing the proper XAI method.

Conceptualization and Modelling

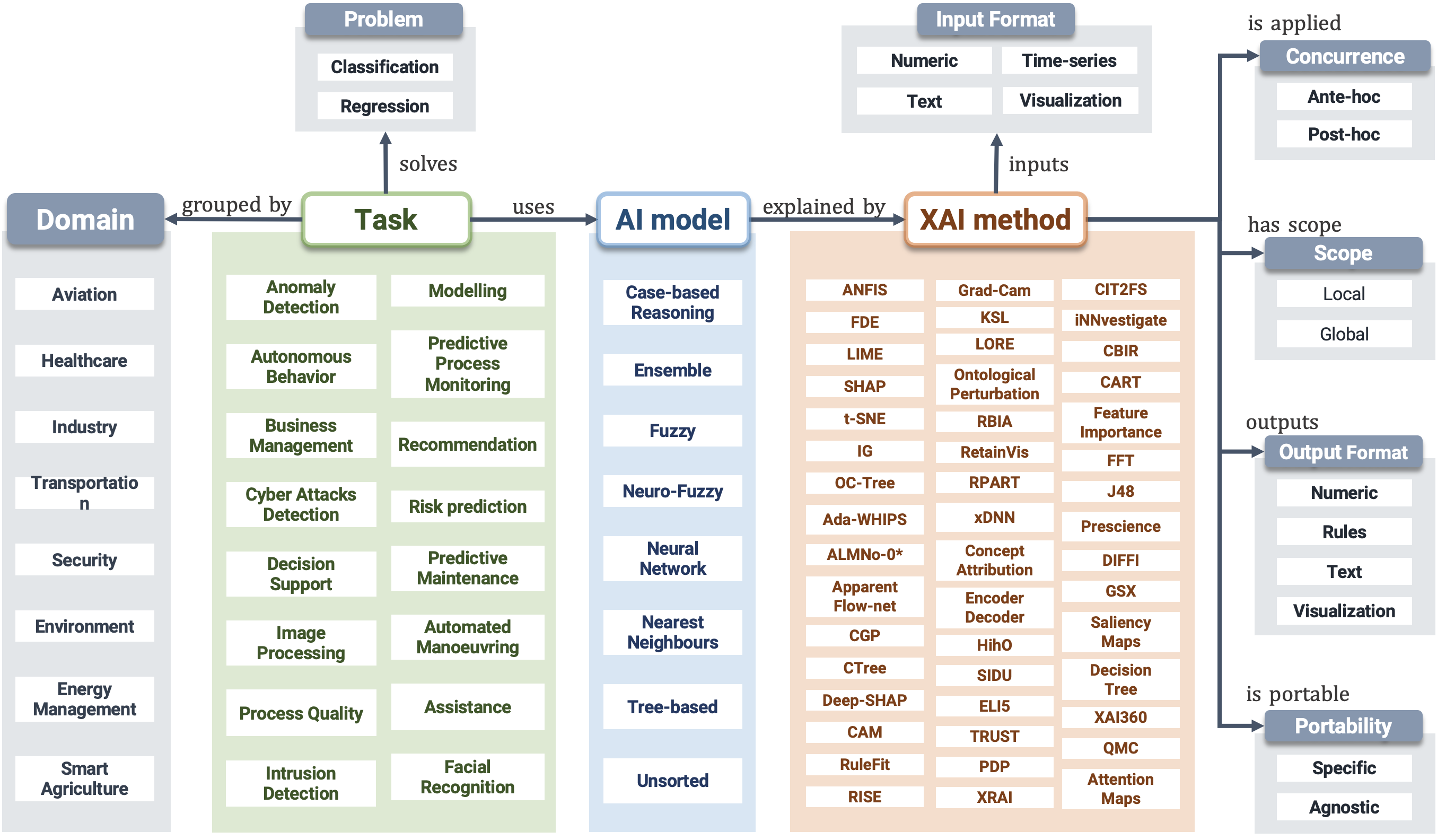

The increasing complexity of the data generated by IoT devices makes it difficult for humans to understand the decisions made by the AI models that interpret these data. To address this issue, we propose the XAIoT model, a theoretical framework based on an in-depth study of the state of the art that defines and delimits the features of explainable AI applied to the IoT.

This conceptual model allows developers to examine the different concepts, attributes, requirements, and relationships to consider when developing this type of system.

The ultimate goal of this conceptual model is to provide a framework that guides the selection of the most suitable XAI solution for a given intelligent IoT application.

We propose the conceptual model represented in the figure below, where the central concept is the XAIoT task performed and explained. XAIoT tasks are grouped by their application domain and use an AI model. The AI model solves a type of problem and is explained by an XAI method. The model also describes an XAI method through some properties well known in the literature: concurrence, scope, output format, input format, and portability.

The relationships that we defined in our conceptual model are: group by and groups (the relationships between domain and AI task), solves (between problem and AI task), uses and isUsedBy (between AI task and AI model), explained by and explains (between XAI method and AI model), is applied (between XAI method and portability), has scope (between XAI method and scope), outputs (between XAI method and output format), and is portable (between XAI method and portability).
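The concepts and relationships above can be sketched as plain data structures. This is only an illustrative reading of the conceptual model, not the ontology itself: the class names, field names, and the example task and domain are assumptions, and the property values given for LIME are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class XAIMethod:
    """An XAI method described by the five properties of the model."""
    name: str
    concurrence: str    # e.g. "post-hoc" or "ante-hoc"
    scope: str          # e.g. "local" or "global"
    input_format: str
    output_format: str
    portability: str    # e.g. "model-agnostic" or "model-specific"


@dataclass(frozen=True)
class AIModel:
    """An AI model that solves a type of problem and is explained by an XAI method."""
    name: str
    problem: str
    explained_by: XAIMethod


@dataclass(frozen=True)
class XAIoTTask:
    """The central concept: a task grouped by a domain that uses an AI model."""
    name: str
    domain: str
    uses: AIModel


# Hypothetical instance, for illustration only.
lime = XAIMethod(
    name="LIME",
    concurrence="post-hoc",
    scope="local",
    input_format="image",
    output_format="image",
    portability="model-agnostic",
)
classifier = AIModel(name="neural network", problem="classification", explained_by=lime)
task = XAIoTTask(name="crop disease detection", domain="smart agriculture", uses=classifier)

print(task.uses.explained_by.name)
```

Navigating from a task to its explainer (`task.uses.explained_by`) mirrors the uses and explained by relationships.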

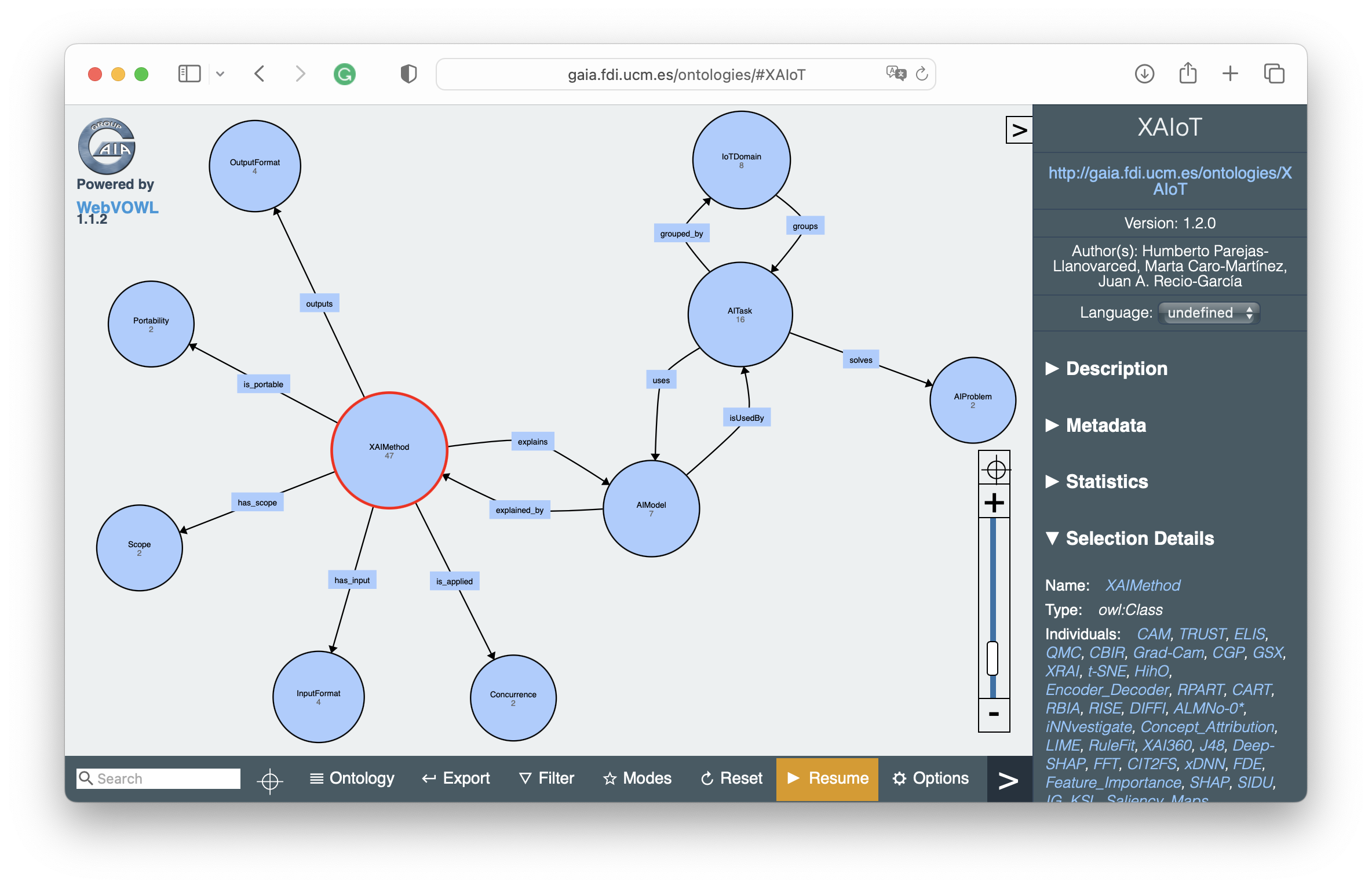

XAIoT-Onto

The XAIoT ontology is the OWL-DL implementation of our conceptual model. It encompasses the same concepts and relationships defined previously and provides the vocabulary for describing XAI methods applied to IoT contexts.

The aim of the ontology is to guide developers in designing XAIoT systems. We therefore implemented it in OWL, a well-known language for representing complex knowledge in the semantic web, using its DL (description logics) profile. Description logics are fragments of first-order logic that allow reasoning over the concepts and relationships described in the ontology. In terms of size, the ontology has 10 classes (the concepts defined in the conceptual model), 12 object properties that establish the relationships between these classes, and 90 instances, each belonging to one of the classes.

You can access and explore the XAIoT Ontology at the following link.

XAIoT Ontology

CBR for the selection of eXplanation Methods for the IoT domain

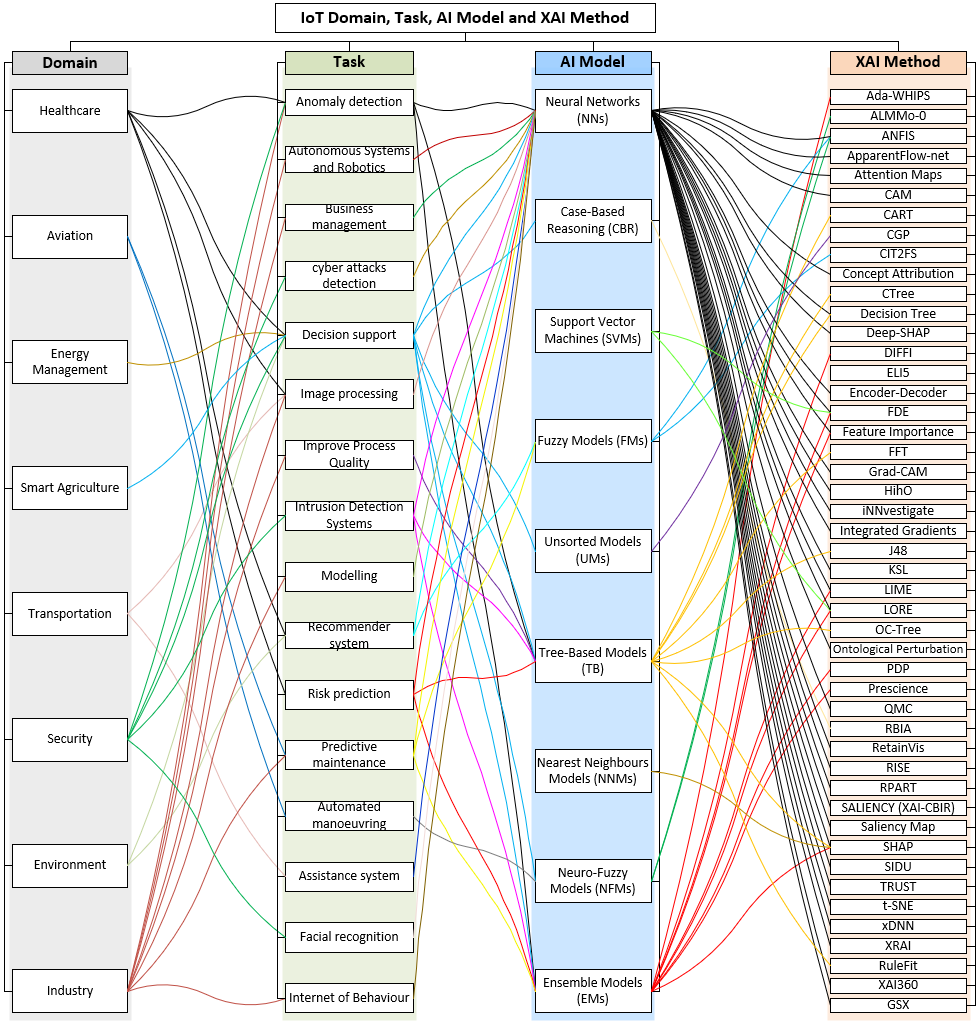

The large number of XAI methods found in the literature and the novelty of AIoT systems make it necessary to support the task of deciding which XAI method is the most adequate for explaining such a system to its users. The choice is challenging because designers of XAIoT systems must consider many facets to make the best decision. To address this problem, we present a CBR solution that uses a wide case base of 513 cases extracted from an exhaustive literature review.

The formalization of the case base is rooted in the previous analysis of existing literature on XAI solutions in the IoT domain. From this analysis, we have inferred the different features required to describe an XAIoT experience. In our formalization, the case description defines the XAIoT problem, while the solution determines the method applied to explain that situation. The case base is available at the following repository:

Case base for the selection of XAI methods in the IoT domain

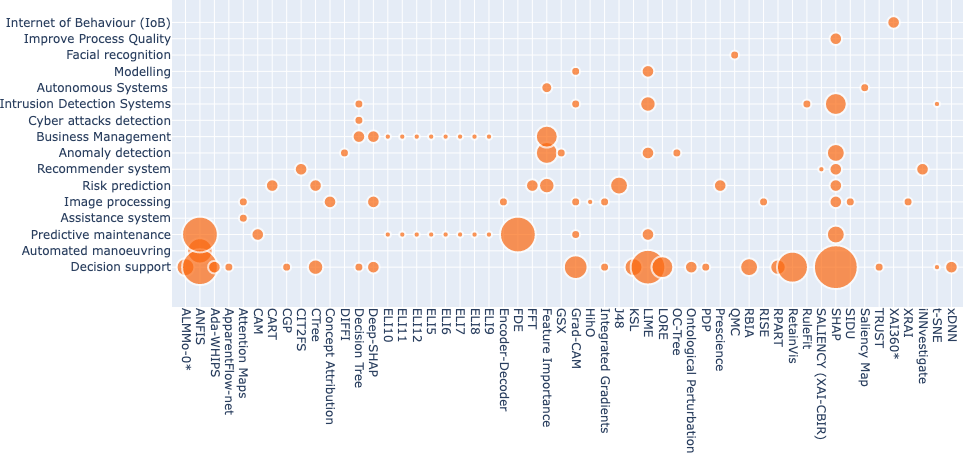

The following picture shows the coverage of the case base. The scatter plot shows the number of cases (bubble size) available in the case base with respect to AI Task (y-axis) and XAI Method (x-axis).

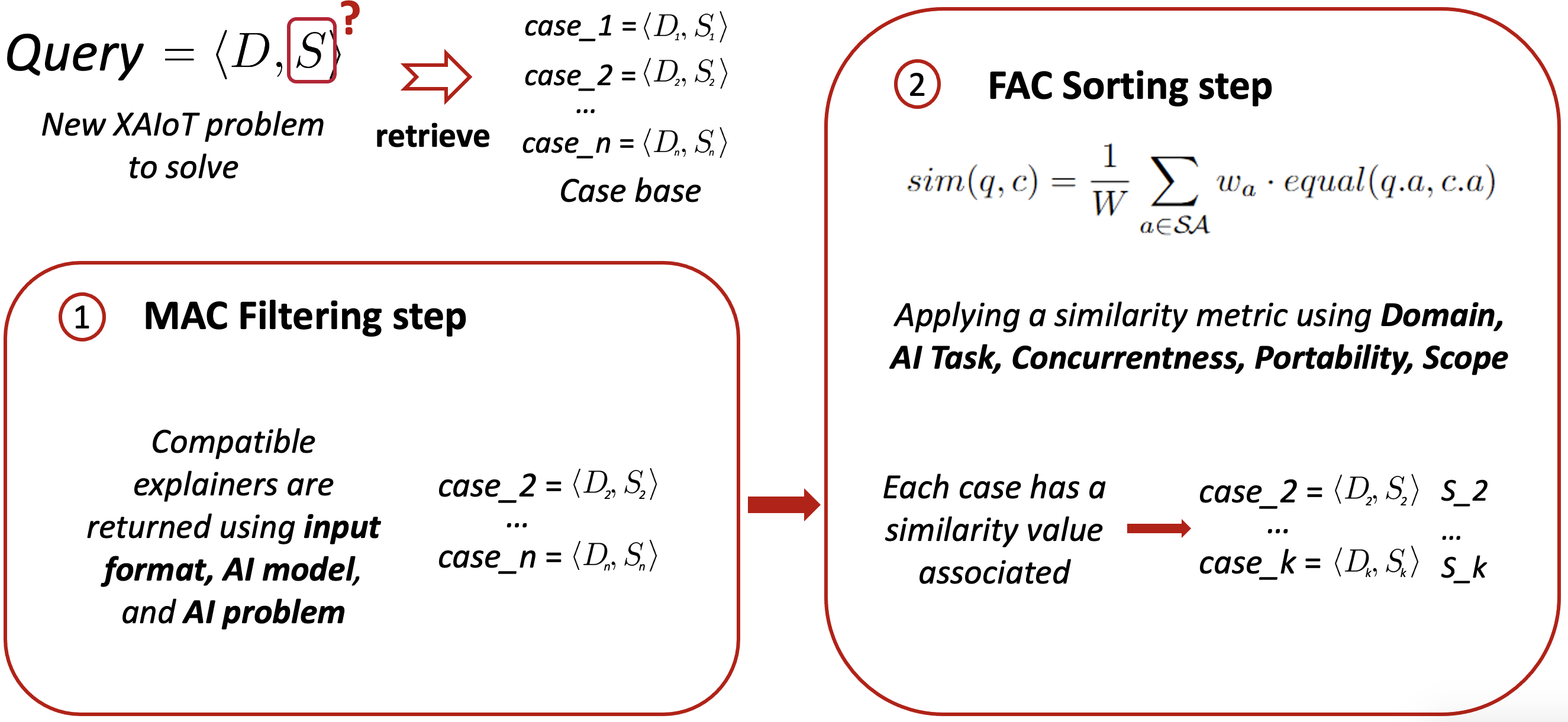

We propose a CBR retrieval process following the MAC/FAC (many-are-called, few-are-chosen) schema.

The filtering step (MAC) is necessary to discard the XAI methods unsuitable for a query and to guarantee that all the retrieved explainers are valid solutions. Therefore, this step filters the compatible XAI methods according to hard restrictions such as the input format, target AI model, or type of AI problem.
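A minimal sketch of such a filtering step follows, assuming cases are plain dictionaries and that the hard restrictions are checked by exact match. The attribute names (`input_format`, `ai_model`, `problem_type`, `xai_method`) and the three example cases are hypothetical and do not reflect the actual schema or content of the case base.

```python
# Hypothetical names for the attributes treated as hard restrictions.
HARD_ATTRIBUTES = ("input_format", "ai_model", "problem_type")


def mac_filter(case_base, query, hard_attributes=HARD_ATTRIBUTES):
    """Keep only the cases whose hard attributes all match the query exactly."""
    return [
        case
        for case in case_base
        if all(case[attr] == query[attr] for attr in hard_attributes)
    ]


# Illustrative cases only; the real case base has its own attributes and values.
case_base = [
    {"input_format": "image", "ai_model": "neural network",
     "problem_type": "classification", "xai_method": "LIME"},
    {"input_format": "tabular", "ai_model": "tree ensemble",
     "problem_type": "classification", "xai_method": "Anchors"},
    {"input_format": "image", "ai_model": "neural network",
     "problem_type": "classification", "xai_method": "Integrated Gradients"},
]
query = {"input_format": "image", "ai_model": "neural network",
         "problem_type": "classification"}

candidates = mac_filter(case_base, query)
print([case["xai_method"] for case in candidates])
```

Because every surviving case satisfies all hard restrictions, any explainer proposed later is at least applicable to the query.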

The sorting step (FAC) obtains the cases most similar to the query $q$ using a similarity metric that compares the remaining attributes in the description.
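One way to sketch this sorting step is a weighted average of exact-match local similarities over the remaining attributes. The metric, the attribute names (`domain`, `scope`, `output_format`), the weights, and the example cases are all assumptions made for illustration; the similarity function actually used may differ.

```python
def similarity(query, case, weights):
    """Weighted share of the compared attributes on which query and case agree."""
    total = sum(weights.values())
    matched = sum(w for attr, w in weights.items() if query[attr] == case[attr])
    return matched / total


def fac_rank(candidates, query, weights, k=3):
    """Return the k candidates most similar to the query as (similarity, case) pairs."""
    scored = [(similarity(query, case, weights), case) for case in candidates]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


# Hypothetical weights and cases (already filtered by the MAC step).
weights = {"domain": 2.0, "scope": 1.0, "output_format": 1.0}
candidates = [
    {"domain": "smart home", "scope": "local", "output_format": "image",
     "xai_method": "Integrated Gradients"},
    {"domain": "healthcare", "scope": "local", "output_format": "text",
     "xai_method": "Anchors"},
    {"domain": "healthcare", "scope": "local", "output_format": "image",
     "xai_method": "LIME"},
]
query = {"domain": "healthcare", "scope": "local", "output_format": "image"}

top = fac_rank(candidates, query, weights, k=2)
for sim, case in top:
    print(case["xai_method"], sim)
```

With these weights a mismatch on `domain` costs twice as much as a mismatch on either of the other attributes, which is one simple way to encode attribute importance.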

After the MAC/FAC retrieval process, the CBR process includes a reuse step. In this step, the solution and similarity values of the nearest neighbors are used to build a final solution for the query.
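The text does not detail how the final solution is built, so the following sketch assumes a similarity-weighted vote: each neighbor votes for its XAI method with a weight equal to its similarity, and the normalized totals give a ranked list of methods. The function name and the example neighbors are hypothetical.

```python
from collections import defaultdict


def reuse(neighbours):
    """Rank XAI methods by similarity-weighted votes.

    `neighbours` is a list of (similarity, xai_method) pairs produced by the
    retrieval step. Returns (xai_method, normalized_score) pairs, best first.
    """
    votes = defaultdict(float)
    for sim, method in neighbours:
        votes[method] += sim
    total = sum(votes.values())
    if total == 0:
        return []
    ranking = sorted(votes.items(), key=lambda item: item[1], reverse=True)
    return [(method, score / total) for method, score in ranking]


# Hypothetical nearest neighbors of a query.
neighbours = [(0.9, "LIME"), (0.8, "XRAI"), (0.7, "LIME")]
ranking = reuse(neighbours)
print(ranking[0][0])
```

A weighted vote lets several moderately similar cases that agree on a method outweigh a single closer case that proposes a different one.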

CBR for the personalization of eXplanation Methods

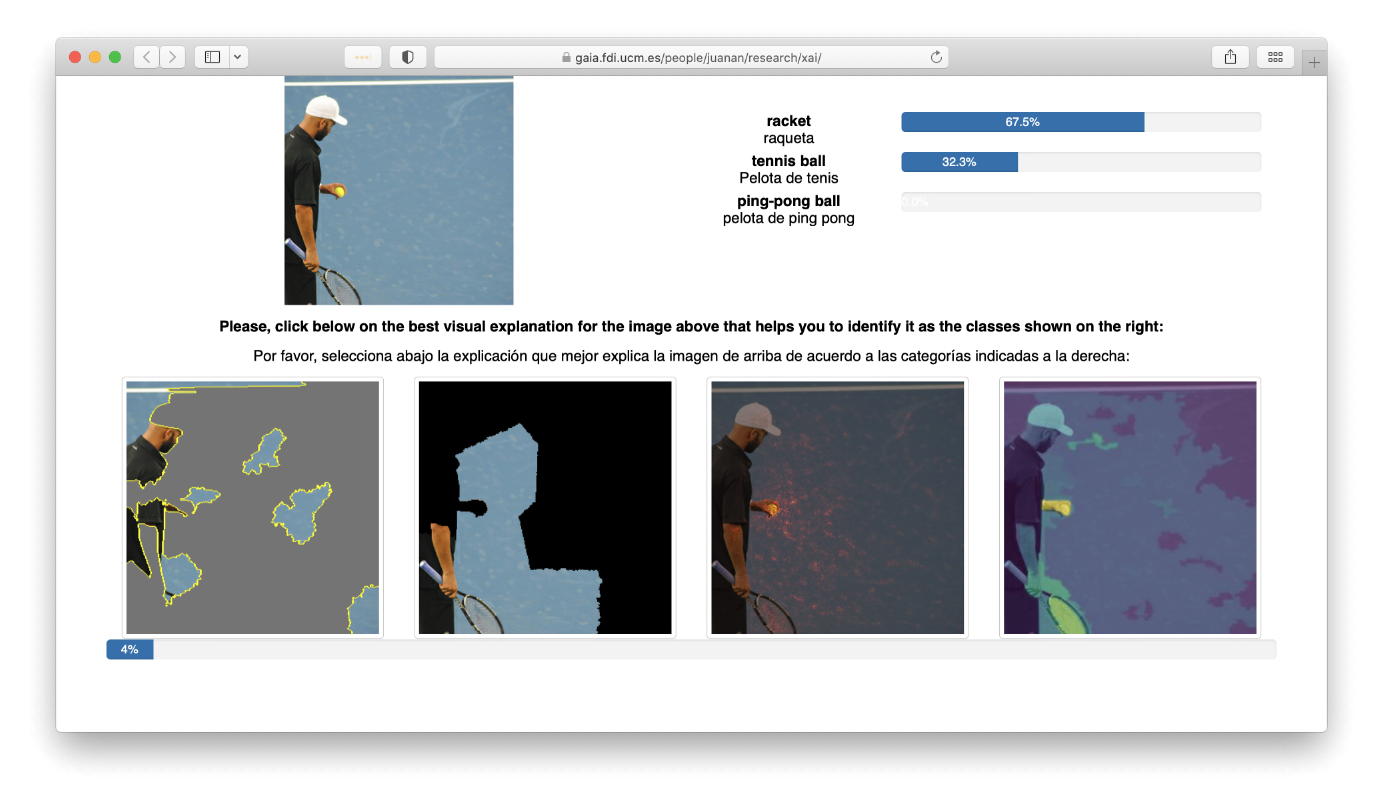

Image classification is one of the most prominent tasks in IoT systems, and Deep Learning (DL) models have demonstrated high accuracy in accomplishing it. However, the outcomes of such models are not explainable to users due to their complex nature, which affects the users' trust in the provided classifications. To solve this problem, several explanation techniques have been proposed, but they greatly depend on the nature of the images being classified and on the users' perception of the explanations.

This is why explaining DL-based image classifiers is one of the current research trends in XAI.

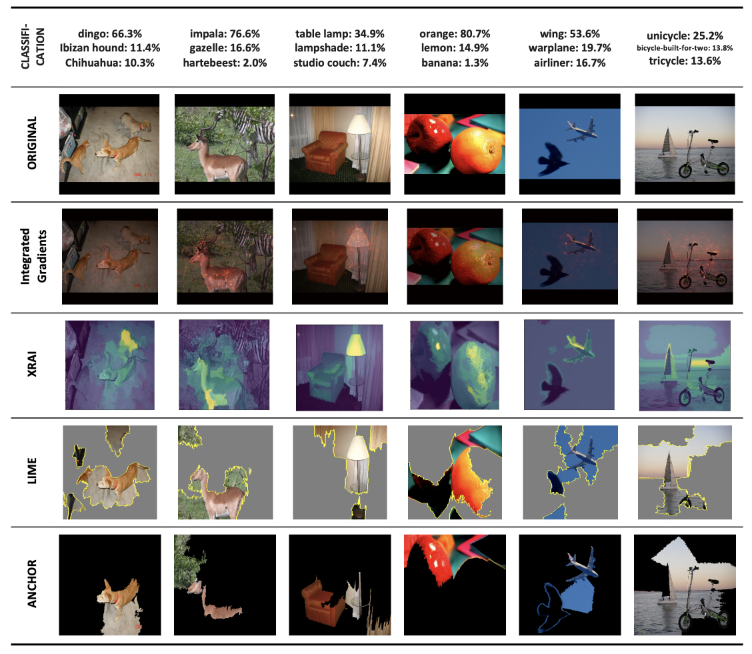

We can find several algorithms for the explanation of image classifiers in current literature, for instance, Local Interpretable Model-Agnostic Explanations (LIME), Integrated Gradients (IG), Anchors, and eXplanation with Ranked Area Integrals (XRAI).

Like other explainable approaches, these algorithms are often specific to a given architecture, and their performance greatly depends on the nature of the images being classified. This raises the challenge of discovering which explanation method best explains the outcome of an image classification model.

Moreover, this challenge arises because the adequacy of an explanation method for a given DL image classification model mostly depends on the users' perception of the quality of the explanations. The following image shows several examples where the optimal explanation may vary according to the user's point of view.

Therefore, the solution to this problem must be rooted in an approach able to capture the human perception of these explanations. Given these preconditions, Case-based Reasoning (CBR) is a suitable candidate. CBR reasons from previous experiences that, given a new problem, are retrieved and adapted to make a prediction. Many previous results exploit this memory-based technique to generate explanations for other AI models, pointing out that CBR is well suited to capturing and reusing human knowledge to generate and evaluate explanations.

You can access the source code at the following link.

Source code