XAI FOR HIP FRACTURE DETECTION

Explainable AI With Visual and Textual Explanations for Hip Fracture Detection

About XAI FOR HIP FRACTURE DETECTION

The process of detecting hip fractures in radiography involves meticulously analyzing the images for any signs of discontinuity in the bone structure. Some types of fractures can be particularly challenging to identify due to their subtle presentation. An incorrect diagnosis can lead to serious consequences: a false negative may result in an untreated fracture that worsens over time, potentially leading to more severe complications, while a false positive can lead to unnecessary treatments, hospitalizations, and the wastage of medical resources. Accurate diagnosis is therefore critical to ensure proper patient care and resource management.

Current techniques for detecting hip fractures in radiography rely heavily on human expertise. Radiologists and orthopedic specialists use their trained eyes and experience to interpret the subtle nuances in X-ray images. This dependence on human skill introduces variability, as the accuracy of the diagnosis can be influenced by the individual’s level of experience, fatigue, and even the quality of the radiographic equipment. While experienced professionals are generally adept at identifying obvious fractures, more subtle cases can be missed or misdiagnosed. This underscores the critical need for highly trained specialists in the field, as well as the potential benefits of developing advanced diagnostic tools to assist human capabilities.

XAI for Hip Fracture Detection

The students Enrique Queipo de Llano Burgos, Alejandro Paz Olalla, and Marius Ciurcau, led by Prof. Belén Díaz Agudo and Prof. Juan Antonio Recio García, have developed image classification models to detect hip fractures. They have implemented visual explanations using Grad-CAM and textual explanations through a system that combines case-based reasoning (CBR) with a large language model (LLM). This work was carried out as the Undergraduate Final Project "Explainable Artificial Intelligence for Hip Fracture Detection", which is published with free access at Docta Complutense.

Additionally, they have published a paper focused on the textual explanations provided by the CBR and LLM system, which was part of the XCBR Workshop at the International Conference on Case-Based Reasoning (ICCBR) 2024 and can be accessed here.

Furthermore, all the code associated with the project is accessible on our GitHub repository MariusCiurcau/TFG-XAI-for-Hip-Fracture-Recognition.

Dataset

We received images from three different sources: the Müller Foundation, the Virgen de la Victoria University Hospital in Málaga, and a dataset from Roboflow called Proximal Femur Fracture.

Because the images came from sources with different data distributions, extensive preprocessing was necessary: some X-rays showed one hip and others two, and the images varied in color and size. To ensure the model classified based on the femur itself, we removed irrelevant features by automatically cropping the femurs with a tuned YOLOv5 model, which achieved a near-perfect F1 score at an 80% confidence threshold.

Femur cropping with YOLOv5
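The cropping step can be illustrated with a minimal sketch. It assumes the detector has already returned bounding boxes with confidence scores; the tuple layout, the function name `crop_femurs`, and the nested-list image representation are hypothetical choices made for illustration, not the project's actual code. Only the 0.8 threshold comes from the text above.

```python
from typing import List, Tuple

# Hypothetical detection format: (x_min, y_min, x_max, y_max, confidence),
# with coordinates in pixels. Real YOLOv5 output would need converting to this.
Detection = Tuple[int, int, int, int, float]
Image = List[List[int]]  # grayscale image stored as rows of pixel values


def crop_femurs(image: Image, detections: List[Detection],
                conf_threshold: float = 0.8) -> List[Image]:
    """Return one crop per detection whose confidence meets the threshold."""
    height, width = len(image), len(image[0])
    crops = []
    for x_min, y_min, x_max, y_max, conf in detections:
        if conf < conf_threshold:
            continue  # discard low-confidence boxes
        # Clamp the box to the image bounds before slicing.
        x0, y0 = max(0, x_min), max(0, y_min)
        x1, y1 = min(width, x_max), min(height, y_max)
        if x1 <= x0 or y1 <= y0:
            continue  # skip empty boxes
        crops.append([row[x0:x1] for row in image[y0:y1]])
    return crops
```

An X-ray showing two hips would yield two crops when both boxes pass the threshold, so each femur can be classified separately.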

After additional standard image processing tasks like color handling and resizing, we obtained a dataset with three unbalanced classes: class 0 (no fracture), class 1 (neck fracture), and class 2 (trochanteric fracture). We balanced the classes using data augmentation, enabling us to train both binary models (fracture vs. no fracture) and three-class models for detailed fracture classification.

Data collection and preprocessing
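As a rough sketch of the balancing step, the snippet below computes how many augmented images each class would need in order to match the largest class, and maps the three-class labels to the binary task. The function names are hypothetical, and matching the largest class is an assumed balancing target; the source does not specify which augmentations were used or what the final class sizes were.

```python
from collections import Counter
from typing import Dict, List


def augmentation_plan(labels: List[int]) -> Dict[int, int]:
    """Number of augmented images to generate per class so that
    every class reaches the size of the largest one."""
    counts = Counter(labels)
    target = max(counts.values())
    return {cls: target - n for cls, n in sorted(counts.items())}


def to_binary(label: int) -> int:
    """Map three-class labels (0 none, 1 neck, 2 trochanteric)
    to the binary task (0 no fracture, 1 fracture)."""
    return 0 if label == 0 else 1
```

With five images of class 0, three of class 1, and two of class 2, the plan asks for zero, two, and three augmented images respectively.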

Hip Fracture Classification

We have developed hip fracture classification models for both two-class (class 0: no fracture, class 1: fracture) and three-class (class 0: no fracture, class 1: neck fracture, class 2: trochanteric fracture) categorizations using PyTorch. These models are based on a tuned version of ResNet18.

ResNet18 architecture

Both the two-class and the three-class models achieved high accuracy and recall.

Confusion matrix of the three-class model

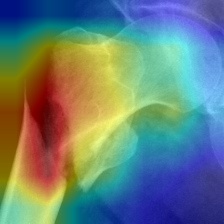
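For readers unfamiliar with how accuracy and recall are read off a confusion matrix, here is a minimal sketch. It assumes rows are true classes and columns are predicted classes; the matrix in the usage note is made up for illustration and does not reproduce the project's results.

```python
from typing import List


def accuracy(cm: List[List[int]]) -> float:
    """Fraction of all samples that lie on the diagonal."""
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(len(cm)))
    return correct / total


def recall_per_class(cm: List[List[int]]) -> List[float]:
    """Per-class recall: correct predictions divided by the row total.
    Assumes rows are true classes and columns are predicted classes."""
    return [cm[i][i] / sum(cm[i]) if sum(cm[i]) else 0.0
            for i in range(len(cm))]
```

For a hypothetical matrix `[[8, 1, 1], [0, 9, 1], [2, 0, 8]]`, accuracy is 25/30 (about 0.83) and the per-class recalls are 0.8, 0.9, and 0.8. In a diagnostic setting, recall on the fracture classes matters most, since a missed fracture is a false negative.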

Visual Explanations with Grad-CAM

We integrated class activation mapping (CAM) techniques to generate heat maps that highlight the importance of each pixel in the model's prediction. Of the available CAM methods, Gradient-Weighted Class Activation Mapping (Grad-CAM) achieved the highest ROADCombined score.

To verify its effectiveness, we consulted an orthopedic doctor, who confirmed that the explanations of the two-class model were better than those of the three-class model, as demonstrated in the examples. In the upper-left example, the two-class model correctly highlighted the fracture, while the three-class model's focus was displaced.

We also experimented with other visual explanation techniques from the Xplique library, such as GradientInput, LIME, VarGrad, and RISE. However, these methods did not provide explanations as clear as the CAM models, a fact confirmed by the expert. Other examples also show how well the two-class model performs.

Grad-CAM visual explanations for fractured femurs
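The core Grad-CAM computation behind these heat maps can be sketched in a few lines. The sketch assumes the feature maps of the last convolutional layer and the gradients of the class score with respect to them have already been extracted from the network; the function name and the nested-list representation are hypothetical, and in practice the resulting coarse map is upsampled to the input resolution before being overlaid on the X-ray.

```python
from typing import List

Map2D = List[List[float]]


def grad_cam(activations: List[Map2D], gradients: List[Map2D]) -> Map2D:
    """Grad-CAM heat map from K feature maps and the gradients of the
    class score with respect to those maps (same shapes)."""
    height, width = len(activations[0]), len(activations[0][0])
    # Channel weight = global average of that channel's gradients.
    weights = [sum(map(sum, g)) / (height * width) for g in gradients]
    # Weighted sum of the feature maps.
    cam = [[0.0] * width for _ in range(height)]
    for w, a in zip(weights, activations):
        for i in range(height):
            for j in range(width):
                cam[i][j] += w * a[i][j]
    # ReLU keeps only regions with a positive influence on the class.
    cam = [[max(0.0, v) for v in row] for row in cam]
    # Scale to [0, 1] for display, unless the map is all zeros.
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[v / peak for v in row] for row in cam]
    return cam
```

Channels whose gradients are negative on average are suppressed by the ReLU, which is why the map highlights only evidence in favor of the predicted class.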

For non-fractured femurs, we explained predictions by showing the most similar image of a non-fractured femur, selected using the structural similarity index measure (SSIM). SSIM measures the similarity between two images, with values ranging from -1 (not similar) to 1 (identical).

Explanation of a non-fractured femur with SSIM
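To make the similarity search concrete, the sketch below computes a simplified SSIM over the whole image and picks the best match from a set of candidates. This is an assumption-laden simplification: standard SSIM implementations average the index over local windows, whereas this version uses a single global window. The function names and the dictionary of candidates are hypothetical.

```python
from typing import Dict, List, Tuple

Image = List[List[float]]


def global_ssim(x: Image, y: Image, data_range: float = 255.0) -> float:
    """Single-window SSIM computed over the whole image (simplified)."""
    xs = [p for row in x for p in row]
    ys = [p for row in y for p in row]
    if len(xs) != len(ys):
        raise ValueError("images must have the same number of pixels")
    n = len(xs)
    mu_x, mu_y = sum(xs) / n, sum(ys) / n
    var_x = sum((p - mu_x) ** 2 for p in xs) / n
    var_y = sum((q - mu_y) ** 2 for q in ys) / n
    cov = sum((p - mu_x) * (q - mu_y) for p, q in zip(xs, ys)) / n
    # Standard stabilizing constants from the SSIM definition.
    c1, c2 = (0.01 * data_range) ** 2, (0.03 * data_range) ** 2
    return ((2 * mu_x * mu_y + c1) * (2 * cov + c2)) / (
        (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2))


def most_similar(query: Image, candidates: Dict[str, Image]) -> Tuple[str, float]:
    """Name and score of the candidate with the highest SSIM to the query."""
    return max(((name, global_ssim(query, img))
                for name, img in candidates.items()),
               key=lambda pair: pair[1])
```

An image compared with itself scores 1, while an image compared with its inverted copy scores close to -1, which matches the range described above.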

Textual Explanations with CBR and LLMs

We wanted to add another layer of explainability through text, but this required a large base of diagnoses, which we lacked. To solve this, we developed a CBR system that extends a small set of textual explanations to new images. We first clustered all images based on SSIM. In the retrieval phase, the system takes the ResNet classification of the image, assigns the image to a cluster, and feeds the textual explanation of that cluster to Mistral and GPT-4, which generate new versions tailored to different users, such as students or experts.

CBR system architecture
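The retrieval phase can be outlined with a minimal sketch. Everything named here is hypothetical: the `Case` structure, the idea that each cluster is stored with one representative image and a class label, and the prompt wording are assumptions made for illustration, since the source does not give these details. The similarity function is passed in as a parameter; in the project it would be based on SSIM.

```python
from dataclasses import dataclass
from typing import Any, Callable, List


@dataclass
class Case:
    label: int            # class associated with the cluster (assumed)
    representative: Any   # representative image of the cluster (assumed)
    explanation: str      # expert-written explanation for the cluster


def retrieve(image: Any, predicted_label: int, case_base: List[Case],
             similarity: Callable[[Any, Any], float]) -> Case:
    """Pick the cluster of the predicted class whose representative
    is most similar to the query image."""
    candidates = [c for c in case_base if c.label == predicted_label]
    if not candidates:
        raise LookupError(f"no cases for class {predicted_label}")
    return max(candidates, key=lambda c: similarity(image, c.representative))


def build_prompt(case: Case, audience: str) -> str:
    """Hypothetical prompt asking an LLM to adapt the stored explanation."""
    return (f"Rewrite the following explanation of a hip X-ray for a "
            f"{audience}, without adding new clinical findings:\n"
            f"{case.explanation}")
```

The string returned by `build_prompt` would then be sent to the chosen LLM, once per target audience. Keeping the expert-written explanation as the only source of clinical content is a design choice that limits what the LLM can invent.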

All this information is displayed in a graphical interface that includes the original image, the visual explanation, the most similar image, and the generated textual explanations.

Graphical user interface

User Evaluation

To check whether the system is truly useful, we evaluated it with experts and students from the medical field in three experiments: selecting the best visual explanation method, rating the utility of the textual explanations, and classifying fractures in X-rays. The results indicated that the Grad-CAM heat map generated by the two-class model is the most effective visual explanation method, and users found the textual explanations useful, although they suggested some minor corrections. In the classification experiment, the model's performance was comparable to that of a student, which shows that there is still room for improvement.

Visual explanations experiment

Textual explanations experiment

Image classification experiment

People

Enrique Queipo de Llano

Alejandro Paz Olalla