xCOLIBRI

The COLIBRI toolbox for developing Case-based Explanation Systems.

About xCOLIBRI

xCOLIBRI is an evolution of the COLIBRI platform focused on applying CBR to the explanation of intelligent systems.

The goal of Explainable Artificial Intelligence (XAI) is "to create a suite of new or modified machine learning techniques that produce explainable models that, when combined with effective explanation techniques, enable end users to understand, appropriately trust, and effectively manage the emerging generation of Artificial Intelligence (AI) systems".

From the CBR perspective, research in XAI has pointed out the importance of taking advantage of human knowledge to generate and evaluate explanations. Therefore, we have created a version of COLIBRI focused on XAI that supports the development of Case-Based Explanation systems. It provides several implementations (in Java, Python or JavaScript) that can be integrated into existing AI systems to enhance their explainability. These tools have been instantiated as image explanation systems to demonstrate and evaluate their suitability.

CBR for the configuration of eXplanation Methods

Research on eXplainable AI has proposed several model-agnostic algorithms, LIME (Local Interpretable Model-Agnostic Explanations) being one of the most popular.

LIME works locally. Instead of trying to explain the entire model, it modifies the specific input instance being explained, monitors the impact of those modifications on the predictions, and uses that impact as the explanation. Although LIME is general and flexible, there are some scenarios where simple perturbations are not enough. Other approaches, such as Anchors, therefore use perturbations that depend on the dataset.
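The local perturb-and-fit loop described above can be sketched in a few lines. This is a simplified illustration and not the `lime` package. Each interpretable component (for an image, a superpixel) is switched on or off at random. The black box scores every perturbed sample, and a weighted linear surrogate is fitted so that samples closer to the original input count more. Several choices here are assumptions made for brevity: the distance (fraction of components removed), the plain weighted least-squares fit, and the toy black box. The real library uses a cosine distance and a ridge regressor, and it also performs the image segmentation that is omitted here.

```python
import numpy as np

def lime_explain(predict_fn, n_features, num_samples=500, kernel_width=0.25, seed=0):
    """Minimal LIME-style local surrogate (simplified sketch, not the lime library).

    predict_fn maps a (num_samples, n_features) 0/1 mask matrix to one score per
    row for the class being explained; each column switches one interpretable
    component (e.g. a superpixel) on (1) or off (0).
    """
    rng = np.random.default_rng(seed)
    # Perturb the query locally; the all-ones row is the unmodified input.
    masks = rng.integers(0, 2, size=(num_samples, n_features))
    masks[0] = 1
    preds = np.asarray(predict_fn(masks), dtype=float)
    # Proximity to the query: fraction of components switched off, fed to an
    # exponential kernel (assumption; lime itself uses cosine distance for images).
    distances = 1.0 - masks.sum(axis=1) / n_features
    weights = np.exp(-(distances ** 2) / kernel_width ** 2)
    # Weighted least-squares fit of a linear surrogate with an intercept column.
    X = np.hstack([np.ones((num_samples, 1)), masks])
    sw = np.sqrt(weights)
    coef, *_ = np.linalg.lstsq(X * sw[:, None], preds * sw, rcond=None)
    return coef[1:]  # importance of each interpretable component

# Hypothetical toy black box: only components 0 and 2 affect the score.
def toy_black_box(masks):
    return 0.7 * masks[:, 0] + 0.2 * masks[:, 2]

importance = lime_explain(toy_black_box, n_features=4)
print(np.round(importance, 3))
```

Because the toy black box is itself linear, the surrogate recovers its weights almost exactly. With a real classifier, the coefficients only approximate the model's behaviour near the query. `num_samples` and `kernel_width` are exactly the kind of parameters whose best values vary from image to image.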

We propose a CBR solution to the problem of configuring the parameters of the LIME algorithm for the explanation of an image classifier. The case base reflects the human perception of the quality of the explanations generated with different parameter configurations of LIME. The configurations that were rated well are then reused to explain similar input images.
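The retrieve-and-reuse step can be illustrated with a minimal sketch built on several hypothetical choices. Each case stores an image descriptor, a LIME configuration and a human rating of the explanation that configuration produced. The query image's descriptor is compared against the case base, and the configuration of the best-rated case among its k nearest neighbours is reused. The descriptor format, the Euclidean distance, the parameter names in the dictionaries and the "best-rated neighbour" policy are all illustrative assumptions. They are not necessarily what CBR-LIME does.

```python
import math
from dataclasses import dataclass

@dataclass
class Case:
    descriptor: tuple   # hypothetical numeric image descriptor
    lime_params: dict   # hypothetical LIME configuration used for this image
    rating: float       # human rating of the resulting explanation

def retrieve_config(case_base, query, k=3):
    # Retrieve: the k cases whose descriptors are closest to the query image.
    nearest = sorted(case_base, key=lambda c: math.dist(c.descriptor, query))[:k]
    # Reuse: the configuration of the best-rated retrieved case.
    best = max(nearest, key=lambda c: c.rating)
    return best.lime_params

case_base = [
    Case((0.1, 0.9), {"num_samples": 1000, "kernel_width": 0.25}, rating=4.0),
    Case((0.2, 0.8), {"num_samples": 500, "kernel_width": 0.5}, rating=2.0),
    Case((0.9, 0.1), {"num_samples": 2000, "kernel_width": 0.1}, rating=5.0),
]
config = retrieve_config(case_base, (0.15, 0.85), k=2)
print(config)  # {'num_samples': 1000, 'kernel_width': 0.25}
```

The third case has the highest rating but is not retrieved, because its image is dissimilar to the query. That is the intended behaviour: a configuration is trusted only for images that resemble the ones it was rated on.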

This CBR solution was presented at the International Conference on Case-Based Reasoning 2020:

Recio-García J.A., Díaz-Agudo B., Pino-Castilla V. (2020) CBR-LIME: A Case-Based Reasoning Approach to Provide Specific Local Interpretable Model-Agnostic Explanations. In: Watson I., Weber R. (eds) Case-Based Reasoning Research and Development. ICCBR 2020. Lecture Notes in Computer Science, vol 12311. Springer, Cham. https://doi.org/10.1007/978-3-030-58342-2_12

CBR for the selection of eXplanation Methods

Research on eXplainable AI (XAI) is continuously proposing novel approaches for the explanation of image classification models, where we can find both model-dependent and model-independent strategies. However, it is unclear how to choose the best explanation approach for a given image, as these novel XAI approaches are radically different.

We propose a CBR solution to the problem of choosing the best alternative for the explanation of an image classifier. The case base reflects the human perception of the quality of the explanations generated with different image explanation methods. Then, this experience is reused to select the best explanation approach for a given image.
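A minimal sketch of this selection step follows, under stated assumptions. Each case is a hypothetical `(descriptor, method_name, rating)` tuple. The k cases nearest to the query image are retrieved, and the method with the highest mean human rating among them is proposed. The tuple layout, the distance and the mean-rating vote are illustrative choices, not the published system's exact design.

```python
import math
from collections import defaultdict

def select_method(case_base, query, k=5):
    """case_base: list of (descriptor, method_name, rating) tuples (hypothetical layout)."""
    # Retrieve the k cases whose images are most similar to the query.
    nearest = sorted(case_base, key=lambda case: math.dist(case[0], query))[:k]
    totals, counts = defaultdict(float), defaultdict(int)
    for _, method, rating in nearest:
        totals[method] += rating
        counts[method] += 1
    # Reuse: propose the method with the highest mean rating among retrieved cases.
    return max(totals, key=lambda m: totals[m] / counts[m])

case_base = [
    ((0.0, 0.0), "LIME", 3.0),
    ((0.1, 0.0), "XRAI", 5.0),
    ((0.0, 0.1), "LIME", 4.0),
    ((0.1, 0.1), "XRAI", 4.0),
    ((5.0, 5.0), "Anchors", 5.0),
]
choice = select_method(case_base, (0.05, 0.05), k=4)
print(choice)  # XRAI
```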

This CBR solution was presented at the International Conference on Case-Based Reasoning 2021:

Recio-García J.A., Parejas-Llanovarced H., Orozco-del-Castillo M.G., Brito-Borges E.E. (2021) A Case-Based Approach for the Selection of Explanation Algorithms in Image Classification. In: Sánchez-Ruiz A.A., Floyd M.W. (eds) Case-Based Reasoning Research and Development. ICCBR 2021. Lecture Notes in Computer Science, vol 12877. Springer, Cham. https://doi.org/10.1007/978-3-030-86957-1_13

CBR for the Composition of eXplanation Methods

This work explores the possibilities of automatically composing XAI methods in order to provide a complete explanation solution. However, XAI methods are not uniform: they are provided by several libraries with heterogeneous signatures and requirements. Therefore, we propose isolating these methods into independent web services that expose a common REST API unifying them.
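The idea of hiding heterogeneous signatures behind one contract can be sketched as an adapter layer. In this sketch, two toy functions with deliberately different signatures stand in for real XAI libraries. Each is wrapped in a service that accepts the same JSON request and returns the same JSON response shape, which is what a REST endpoint would then expose. The class names, the request/response fields and the `handle` dispatcher are all hypothetical. They are not the prototype's actual API.

```python
import json
from abc import ABC, abstractmethod

class ExplainerService(ABC):
    """Common contract that every wrapped XAI method exposes (hypothetical)."""
    name: str

    @abstractmethod
    def explain(self, request: dict) -> dict:
        """Take a JSON-like request and return a JSON-like explanation."""

# Two toy "libraries" with heterogeneous signatures (stand-ins, not real APIs).
def toy_attribution(values, baseline=0.0):
    return [v - baseline for v in values]

def toy_rule_miner(instance, threshold):
    return [f"x{i} > {threshold}" for i, v in enumerate(instance) if v > threshold]

class AttributionService(ExplainerService):
    name = "attribution"

    def explain(self, request):
        params = request.get("params", {})
        scores = toy_attribution(request["instance"], params.get("baseline", 0.0))
        return {"method": self.name, "type": "feature_importance", "explanation": scores}

class RuleService(ExplainerService):
    name = "rules"

    def explain(self, request):
        params = request.get("params", {})
        rules = toy_rule_miner(request["instance"], params.get("threshold", 0.5))
        return {"method": self.name, "type": "rules", "explanation": rules}

REGISTRY = {service.name: service for service in (AttributionService(), RuleService())}

def handle(method, body):
    """What a POST /<method> handler could do: same JSON in, same JSON shape out."""
    return json.dumps(REGISTRY[method].explain(json.loads(body)))

body = json.dumps({"instance": [0.2, 0.9, 0.7]})
print(handle("rules", body))
# {"method": "rules", "type": "rules", "explanation": ["x1 > 0.5", "x2 > 0.5"]}
print(handle("attribution", body))
```

Because every service answers with the same envelope, a composition layer can chain or compare methods without knowing which library sits behind each endpoint.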

You can access our preliminary prototype at the following link.

Source code

Image Explanation Methods

Research on eXplainable AI (XAI) is continuously proposing novel approaches for the explanation of image classification models, where we can find both model-dependent and model-independent strategies. Four main methods are especially relevant nowadays. The first two, based on Shapley values, are Integrated Gradients (IG) and eXplanation with Ranked Area Integrals (XRAI). There are also two significant methods based on local surrogate models: Local Interpretable Model-Agnostic Explanations (LIME) and Anchors.
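Integrated Gradients attributes a prediction F(x) to each input feature i by accumulating the gradient along the straight path from a baseline x' to x: IG_i(x) = (x_i - x'_i) · ∫₀¹ ∂F(x' + α(x - x'))/∂x_i dα. The sketch below approximates the integral with a midpoint Riemann sum on a hypothetical two-feature toy function with a hand-written gradient. For an image classifier, the gradient would come from the deep learning framework instead. The final check illustrates IG's completeness property: the attributions sum to F(x) - F(x').

```python
import numpy as np

def f(x):
    # Hypothetical toy differentiable "model": F(x) = x0^2 + 3*x0*x1
    return x[0] ** 2 + 3 * x[0] * x[1]

def grad_f(x):
    # Analytic gradient of the toy model.
    return np.array([2 * x[0] + 3 * x[1], 3 * x[0]])

def integrated_gradients(x, baseline, grad_fn, steps=1000):
    # Midpoint Riemann sum of the gradient along the path baseline -> x.
    alphas = (np.arange(steps) + 0.5) / steps
    path_grads = np.array([grad_fn(baseline + a * (x - baseline)) for a in alphas])
    return (x - baseline) * path_grads.mean(axis=0)

x = np.array([1.0, 2.0])
baseline = np.zeros(2)
ig = integrated_gradients(x, baseline, grad_f)
print(ig)                          # approximately [4. 3.]
print(ig.sum(), f(x) - f(baseline))  # both approximately 7
```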

However, running these methods is not as simple as it should be. Therefore, we have developed several scripts to generate visual explanations of image classifications. These scripts provide a simple way to use a common model (Google's Inception v3) with several state-of-the-art explanation methods. They make it possible to compare the results, and they support our CBR process that selects the best approach for a given query image.

You can access our scripts at the following link.

Source code

Image Similarity Metrics

A key element in the CBR process is the similarity metric used for the retrieval of similar images from the case base. We have defined three different approaches:

- Pixel-to-pixel. A straightforward way to retrieve similar images is to compare their pixel matrices. This similarity metric uses the difference between the pixels of both images.

- Histogram correlation. This similarity metric is based on the correlation between the color histograms of the images.

- Structural similarity index (SSIM). This metric compares the structural changes in the image and has shown good agreement with human observers in image comparison using reference images. The SSIM index can be viewed as a quality measure of one of the images being compared, provided the other image is regarded as having perfect quality. It combines three comparison measurements between the samples x and y, namely luminance l, contrast c and structure s: SSIM(x, y) = [l(x, y)]^α · [c(x, y)]^β · [s(x, y)]^γ, where the exponents α, β, γ > 0 weight the three components.
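The three metrics listed above can be sketched with NumPy as follows. These are illustrative versions, not the project's code. The sketch assumes images are float arrays scaled to [0, 1]. Pixel-to-pixel is taken as 1 minus the mean absolute difference. Histogram correlation is the Pearson correlation of per-channel intensity histograms, which is what OpenCV's `compareHist` computes with `HISTCMP_CORREL`. The SSIM function uses α = β = γ = 1 and the usual constants C1 = (0.01·L)², C2 = (0.03·L)², with C3 = C2/2 folded into the contrast-structure term. It computes a single global window for brevity, whereas standard SSIM averages this quantity over local sliding windows, as `skimage.metrics.structural_similarity` does.

```python
import numpy as np

def pixel_similarity(a, b):
    """Pixel-to-pixel: 1 minus the mean absolute pixel difference (images in [0, 1])."""
    return 1.0 - float(np.abs(a.astype(float) - b.astype(float)).mean())

def histogram_correlation(a, b, bins=32):
    """Pearson correlation between per-channel intensity histograms."""
    def hist(img):
        img = img[..., None] if img.ndim == 2 else img  # grayscale -> one channel
        return np.concatenate([
            np.histogram(img[..., c], bins=bins, range=(0.0, 1.0))[0]
            for c in range(img.shape[-1])
        ])
    return float(np.corrcoef(hist(a), hist(b))[0, 1])

def global_ssim(a, b, data_range=1.0):
    """SSIM with alpha = beta = gamma = 1, computed over the whole grayscale image."""
    c1, c2 = (0.01 * data_range) ** 2, (0.03 * data_range) ** 2
    x, y = a.astype(float).ravel(), b.astype(float).ravel()
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()
    cov = ((x - mx) * (y - my)).mean()
    return float(((2 * mx * my + c1) * (2 * cov + c2))
                 / ((mx ** 2 + my ** 2 + c1) * (vx + vy + c2)))

# Compare a random RGB image with a slightly noisy copy of itself.
rng = np.random.default_rng(0)
img = rng.random((64, 64, 3))
noisy = np.clip(img + rng.normal(0.0, 0.05, img.shape), 0.0, 1.0)
gray, gray_noisy = img.mean(axis=2), noisy.mean(axis=2)

print(pixel_similarity(img, noisy))
print(histogram_correlation(img, noisy))
print(global_ssim(gray, gray_noisy))
```

All three return 1 for identical images. They differ in what they are sensitive to. Pixel-to-pixel reacts to any misalignment. Histogram correlation ignores spatial layout entirely. SSIM reacts to changes in local structure.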

You can access our code at the following link.

Source code

XAI Visualization

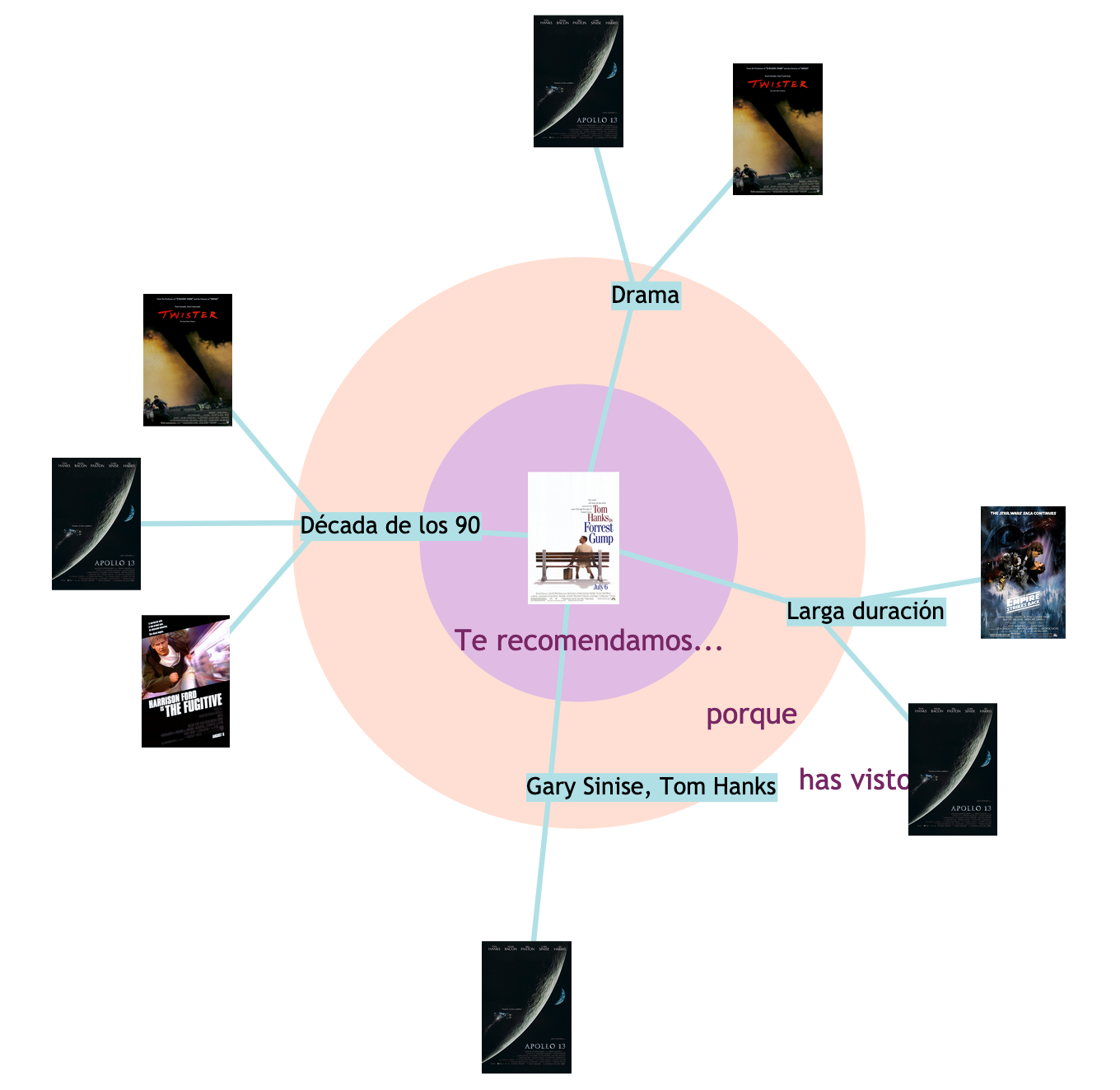

Explanations in recommender systems are a requirement for improving users' trust and experience. Traditionally, explanations in recommender systems are derived from their internal data about ratings, item features and user profiles. However, this information is not available in black-box recommender systems that lack data transparency. To overcome this lack of knowledge, this work proposes a surrogate explanation-by-example method for recommender systems, based on knowledge graphs, that only requires information about the interactions between users and items.

These knowledge graphs are transformed into item-based and user-based structures, to which link prediction techniques are applied in order to find similarities between the nodes and to identify explanatory items for the recommendation that the black-box system gave the user.
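The item-based idea can be sketched as follows, with the specific link-prediction score being an assumption. The approach described above does not name one, so this sketch swaps in the classical Jaccard coefficient. From raw (user, item) interactions, each item is linked to the users who interacted with it. Two items are then scored by the overlap of their user sets, which is a link-prediction heuristic on the item-based projection of the bipartite graph. The items in the user's own history that score highest against the black-box recommendation are returned as explanatory examples. All names and data below are hypothetical.

```python
from collections import defaultdict

def explain_recommendation(interactions, user, recommended, top_n=2):
    """Rank the user's own items as explanations for `recommended`.

    interactions: iterable of (user, item) pairs, the only data assumed available.
    Score: Jaccard coefficient of the two items' user neighbourhoods (a swapped-in
    link-prediction heuristic, not necessarily the one used by the authors).
    """
    users_of = defaultdict(set)   # item -> users who interacted with it
    items_of = defaultdict(set)   # user -> items they interacted with
    for u, i in interactions:
        users_of[i].add(u)
        items_of[u].add(i)

    def jaccard(i, j):
        union = users_of[i] | users_of[j]
        return len(users_of[i] & users_of[j]) / len(union) if union else 0.0

    candidates = items_of[user] - {recommended}
    # Highest similarity first; ties broken alphabetically for determinism.
    ranked = sorted(candidates, key=lambda j: (-jaccard(recommended, j), j))
    return [(j, round(jaccard(recommended, j), 3)) for j in ranked[:top_n]]

interactions = [
    ("ana", "matrix"), ("ana", "alien"), ("ana", "amelie"),
    ("bob", "matrix"), ("bob", "alien"), ("bob", "blade_runner"),
    ("carl", "matrix"), ("carl", "blade_runner"),
    ("dora", "amelie"),
]
# Suppose the black box recommended "blade_runner" to "ana".
explanation = explain_recommendation(interactions, "ana", "blade_runner")
print(explanation)  # [('matrix', 0.667), ('alien', 0.333)]
```

The output reads as an explanation by example: "blade_runner" was recommended because users who liked it also liked "matrix" and "alien", which are already in the user's history. Only interaction data was needed, which matches the black-box setting described above.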

You can access our code and an online demo at the following links.

Source code

Online demo (in Spanish)